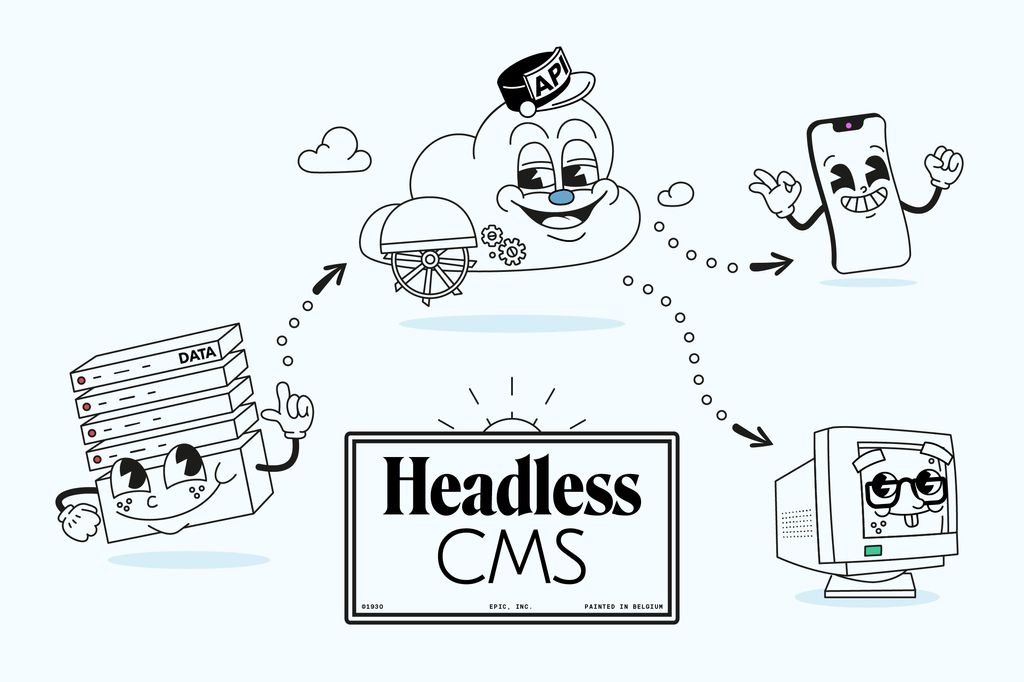


The province of Liège approached us to come up with a tablet app to tackle physical and mental health issues in children. The app was scheduled to be deployed in all primary schools of the province.

Want to know more about the end result? Check out the Tip Top Kids case study.

During the pitch, we had imagined a whole series of features, which required us to make technical choices early on in the project. The first step was therefore to do a little R&D, especially since we had to give the client technical specifications so they could purchase the right tablets to run the experience.

At EPIC we’re familiar with web technologies and over the years we’ve shipped quite a few web games and experiences using technologies such as HTML5 and WebGL.

So it was natural for us to initially opt for a PWA approach. However, we soon realized that the web platform wasn’t quite up to the level we had expected: many of the standards we wanted to use were incomplete or simply hadn’t been implemented yet (WebXR, Web Bluetooth API…).

Upon realizing that, we shifted towards a fully native stack using Unity. We had been toying with that engine for a while and decided to give it a go on a real production project!

So far so good (in terms of tech choices), but honestly, it was not a smooth ride at first. Being used to developing web applications, we quickly noticed some pretty big differences when developing native apps with Unity.

“Well, that’s a nice surprise”:

👍 C# is actually easy to grasp, especially if you have some experience with TypeScript

👍 You can edit stuff while the app is running

“Well, that sucks”:

👎 Debugging and deploys are slow and painful

👎 Testing AR is torture

👎 There is a lack of solid open-source libraries

👎 Good luck with Git LFS and conflicts… we tried following best practices and all, but we had quite a few issues nonetheless

👎 Applying classic design patterns is pretty hard when half of the app is written in code and the other half is built through the editor GUI

👎 Unity can crash easily… and often does, multiple times a day

When considering which augmented reality solution to use, ARKit and ARCore seemed more or less equivalent. So we chose between them based on another criterion: device compatibility (ARKit is limited to Apple devices, while ARCore targets Android).

Android seemed to offer a broader choice of hardware and simpler deployment and update processes… and, from Google’s list of supported devices, the Samsung Galaxy Tab S4 appeared to check all the boxes for the “best” device.

That being said, it is a choice we will carefully reassess for each new project, as both AR technologies have their advantages.

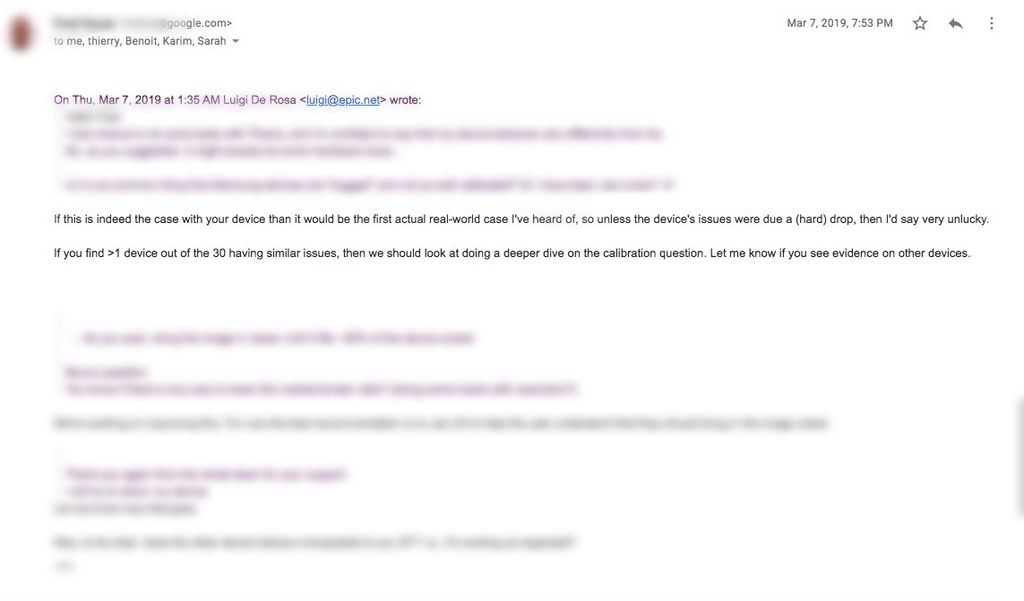

We ran into huge issues running ARCore on the tablet, with massive lag and unstable feature tracking. We spent days and nights (mostly days) debugging this without figuring out what the problem was.

We were lucky to get help from one of the Google engineers working on ARCore, and the funny thing is that the problem was just bad luck: we had the huge misfortune of getting a malfunctioning device during the development phase.

As soon as we replaced it, *Poof*, everything started working perfectly!

Once we had decided to go with Android, we focused on key features such as recognizing the images and estimating their distance. This raised a major question: true augmented reality, or simple marker-based image recognition?

We tested a whole lot of solutions: ARCore, Image Recognition, Wikitude, ARToolKit, Arcolib, EasyAR, Maxst,… (the list goes on and on). When one solution wouldn’t work at all, another, although super stable, would cost twice as much as the whole project… not a great way to keep the budget under control… Welcome to the “3rd party” party!

While simple image recognition gave great results for one or two images, detecting 4 images simultaneously caused major issues (for instance, each image no longer took up enough of the screen).

The advantage of a true augmented reality solution was the ability to detect an image up close, leave a marker in the environment and then move on to the next image.

The (major) drawback: it’s not enough to hold the tablet in front of the image. Without a minimum amount of information about the real environment, ARCore can neither detect the images nor estimate distances, scales, etc.

A preliminary ‘scan’ of the environment is therefore necessary, which in practice proved challenging in terms of user experience (tutorial, explanations, videos, overlays, audio instructions…?) for our target users (excited primary school children).

The project was very ambitious. Not so much from a technical point of view, but because of its target audience: 8- and 9-year-olds playing together in groups of 3 or 4. We had to make sure to:

We teamed up with psychologists and teachers from the province to build the experience and interface of the app. Here are just some of the UX solutions we decided to go for:

In the game, we integrated an existing character as the protagonist: an older-brother figure called TipTop.

His main goal? Helping our heroes complete the game’s various missions. His role is to explain the rules, say whose turn it is to play, and help or give guidance when needed.

We created TipTop from an existing 2D illustration, then modeled him in Blender, and animated him through motion capture technology (thanks to our friends at Mysis!)

We used Blender extensively in order to create videos, images and 3D model assets to be used in the game.

Here are some of the assets we created:

For some missions in the game, the children have to find special objects hidden somewhere in the school. We used Estimote proximity beacons, which rely on the Bluetooth Low Energy (BLE) protocol.

Reading the Bluetooth RSSI (received signal strength indicator) helped us estimate the distance between the tablet and the beacon. As the RSSI value is inherently unstable, we tried to filter out the noise with a Kalman filter, which we ported to C# from a JavaScript implementation.
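The idea can be illustrated with a minimal TypeScript sketch (not the C# port used in the project): a one-dimensional Kalman filter that smooths successive RSSI readings, followed by the widely used log-distance path-loss model to turn a smoothed RSSI into a rough distance. The names `KalmanFilter1D` and `rssiToDistance`, the noise parameters (`processNoise`, `measurementNoise`), the 1 m reference power `txPower = -59` dBm and the path-loss exponent `n = 2` are all assumptions for illustration; real values would have to be calibrated for the beacons and the school buildings.

```typescript
// Sketch of a 1D Kalman filter for smoothing RSSI readings.
// Model assumption: the true RSSI is roughly constant between readings,
// so any jitter around it is treated as measurement noise.
class KalmanFilter1D {
  private estimate: number | null = null; // current state estimate
  private errorCov = 0; // variance (uncertainty) of the estimate
  private readonly processNoise: number; // Q: assumed drift of the true value per step
  private readonly measurementNoise: number; // R: assumed variance of one reading

  constructor(processNoise = 0.008, measurementNoise = 4) {
    this.processNoise = processNoise;
    this.measurementNoise = measurementNoise;
  }

  filter(measurement: number): number {
    if (this.estimate === null) {
      // First reading: start from the measurement, with its own uncertainty.
      this.estimate = measurement;
      this.errorCov = this.measurementNoise;
      return measurement;
    }
    const prior = this.estimate;
    // Predict: value assumed unchanged, but uncertainty grows by Q.
    const predictedCov = this.errorCov + this.processNoise;
    // Update: the Kalman gain weighs the new reading against the prediction.
    const gain = predictedCov / (predictedCov + this.measurementNoise);
    this.estimate = prior + gain * (measurement - prior);
    this.errorCov = (1 - gain) * predictedCov;
    return this.estimate;
  }
}

// Log-distance path-loss model: rssi = txPower - 10 * n * log10(d),
// hence d = 10 ^ ((txPower - rssi) / (10 * n)), with d in meters.
// txPower (RSSI measured at 1 m) and n (environment exponent) are assumed.
function rssiToDistance(rssi: number, txPower = -59, n = 2): number {
  return Math.pow(10, (txPower - rssi) / (10 * n));
}

// Usage: smooth a noisy series of readings, then convert to a distance.
const rssiFilter = new KalmanFilter1D();
let smoothedRssi = NaN;
for (const reading of [-70, -75, -68, -80, -72, -71, -74]) {
  smoothedRssi = rssiFilter.filter(reading);
}
console.log(smoothedRssi.toFixed(1), "dBm, about", rssiToDistance(smoothedRssi).toFixed(2), "m");
```

A larger `measurementNoise` relative to `processNoise` lowers the gain, giving a smoother but slower-reacting estimate when a child walks toward a beacon.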

The challenge of the last level: a collaborative game played in real time, in augmented reality.

On paper, it’s not rocket science: a Raspberry Pi creates a Wi-Fi access point and runs a small Node.js server with WebSockets.
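The server’s role can be sketched as a small in-memory room that relays each tablet’s messages to the others. The TypeScript below is an illustrative sketch under assumptions, not the project’s server: the `GameRoom` class, the `Client` interface and the JSON message shape (`type`, `payload`) are hypothetical, and the networking layer is left out so the logic runs on its own. On the Raspberry Pi, each connection accepted by a Node.js WebSocket library such as `ws` could be wrapped as a `Client`, since its sockets expose a `send` method.

```typescript
// Hypothetical JSON message relayed between tablets.
interface GameMessage {
  from: string; // id of the sender ("server" for room events)
  type: string; // application-defined event name
  payload: unknown;
}

// Anything that can receive a text frame. On the real server, an
// accepted WebSocket connection would be wrapped in this shape.
interface Client {
  id: string;
  send(data: string): void;
}

class GameRoom {
  private clients: Client[] = [];
  private readonly maxPlayers: number;

  constructor(maxPlayers: number) {
    this.maxPlayers = maxPlayers;
  }

  // Adds a tablet and announces it to everyone, the new tablet included.
  join(client: Client): boolean {
    if (this.clients.length >= this.maxPlayers) return false; // room full
    if (this.clients.some((c) => c.id === client.id)) return false; // duplicate id
    this.clients.push(client);
    this.broadcast({ from: "server", type: "joined", payload: client.id });
    return true;
  }

  // Removes a tablet and notifies the remaining ones.
  leave(id: string): void {
    const before = this.clients.length;
    this.clients = this.clients.filter((c) => c.id !== id);
    if (this.clients.length < before) {
      this.broadcast({ from: "server", type: "left", payload: id });
    }
  }

  // Handles a raw text frame from one tablet: validates it, then relays it
  // to every other tablet. Malformed or unknown-sender frames are dropped.
  receive(fromId: string, raw: string): void {
    if (!this.clients.some((c) => c.id === fromId)) return;
    let parsed: unknown;
    try {
      parsed = JSON.parse(raw);
    } catch {
      return;
    }
    if (typeof parsed !== "object" || parsed === null) return;
    const { type, payload } = parsed as { type?: unknown; payload?: unknown };
    if (typeof type !== "string") return;
    this.broadcast({ from: fromId, type, payload }, fromId);
  }

  // Serializes once, then sends to every client except the optional sender.
  private broadcast(msg: GameMessage, exceptId?: string): void {
    const data = JSON.stringify(msg);
    for (const client of this.clients) {
      if (client.id !== exceptId) client.send(data);
    }
  }
}
```

In this sketch the server only fans messages out and keeps no game state, which keeps the Raspberry Pi’s job small; `maxPlayers` could be set to match the groups of 3 or 4 children.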

Welcome back to the “third-party” party! Another “web vs. Unity” difference surprised us: the community spirit around native development tools. Sure, there are forums, mutual help and so on, but the ecosystem is far from open source.

And for good reason: you immediately sense that this is a profit-driven environment where a lot of money can potentially be made, all the more so given the monopoly in the market. ¯\_(ツ)_/¯

In short, we went back to Google and/or the Unity Asset Store to find the perfect package.

On the server side, this gave us the opportunity to use TypeScript.

As for the client… we discovered “threads”!!!

In JavaScript, asynchrony is handled with promises and callbacks. But in C#, between Unity and .NET, how do you run code coming from a background thread on Unity’s main thread, which is the only thread allowed to call most of the Unity API?

Good morning, coroutines, Invoke and other queues!
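One common answer in Unity is a small “main-thread dispatcher”: background threads (for instance, a socket receive loop) push actions into a thread-safe queue, typically a .NET `ConcurrentQueue<Action>`, and a MonoBehaviour drains that queue in `Update()` on the main thread. The TypeScript sketch below models only the shape of that pattern, since JavaScript has no shared-memory threads to protect here; `MainThreadDispatcher`, `enqueue`, `drain` and the per-frame budget `maxPerFrame` are hypothetical names and values.

```typescript
type Job = () => void;

// Hypothetical "main-thread dispatcher" modeled in TypeScript.
// Producers (network callbacks) enqueue jobs at any time; the game loop
// drains the queue once per frame, so jobs only ever run inside the loop.
class MainThreadDispatcher {
  private queue: Job[] = [];
  private readonly maxPerFrame: number;

  // maxPerFrame is an assumed budget that keeps one frame from stalling.
  constructor(maxPerFrame = 50) {
    this.maxPerFrame = maxPerFrame;
  }

  // Producer side. In Unity/C# this is called from a background thread,
  // so the queue there must be thread-safe (e.g. ConcurrentQueue<Action>).
  enqueue(job: Job): void {
    this.queue.push(job);
  }

  // Consumer side, called once per frame (in Unity: from Update()).
  // Runs up to maxPerFrame jobs in FIFO order and returns how many ran.
  drain(): number {
    // Take the batch out first: jobs enqueued while draining wait for the next frame.
    const batch = this.queue.splice(0, this.maxPerFrame);
    for (const job of batch) {
      try {
        job();
      } catch (err) {
        console.error("dispatched job failed:", err); // keep running the rest
      }
    }
    return batch.length;
  }
}
```

Removing the batch before running it means a job that schedules another job cannot extend the current frame indefinitely; the new job simply runs on the next `drain()`.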

Of course, the whole experience was tested forwards, backwards and sideways many times at EPIC… but we still needed real-user testing to uncover unexpected behavior and usage.

Luckily for us, it wasn’t very hard to recruit kids to test the game. We even had the opportunity to run several large-scale sessions in different schools to make sure nothing was left to chance.

Once again, we realized that even if a whole team of digital experts has extensively tested a digital experience… 10 kids will always lead to a few more bug tickets in GitLab!

This project was huge fun and kept us busy for several months. It allowed us to step away from the intangible world of 100% digital products and create something physical, where we could directly see the impact on end users.

Working with native development tools showed us a side of our work we rarely had the opportunity to explore at the time, and we sure learned a lot.

Side note: the game is still being rolled out to all schools in the region in 2022/2023 and keeps getting really good feedback from all users.